February Progress Prizes and Updates

Our first set of progress prizes for 2025 features continued high-quality work from several dedicated contributors.

We also have some updates after the prizes section, so keep reading!

3 x $2,500 (Sestertius)

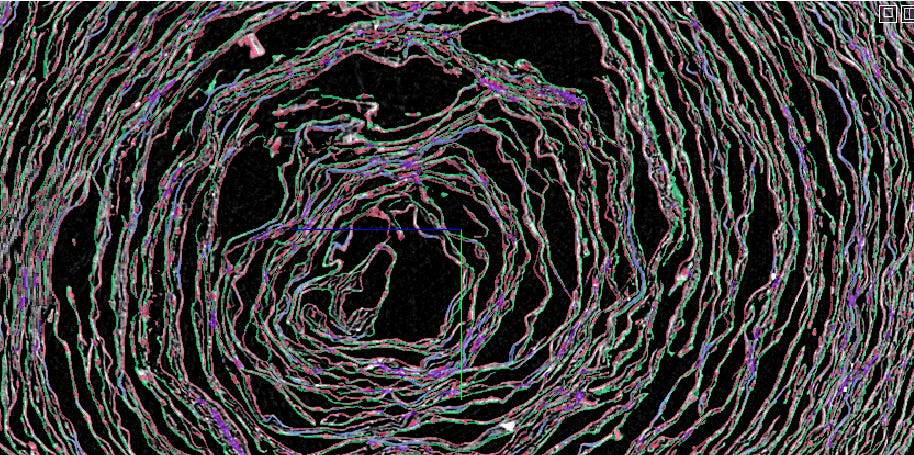

Johannes Rudolph has continued his development of vesuvius-gui, adding support for viewing large autosegmentations created by our surface tracing method like this scroll 4 automated unrolling attempt.

Hendrik Schilling released some bug fixes and Ubuntu packages for VC3D , enabling easier community access to the tooling. He also enabled 16-bit Zarr support for VC3D, reducing the data processing steps from download to startup and improved upon the FASP submission from last year.

Hendrik Schilling also added two new tools to VC3D:

vc_seg_add_overlap- this allows a user to add the required overlap information from an autogenerated trace, making it possible to use these as “superpatches” to quickly annotate large regions of the scroll as “valid” regions. This was a requested tool from our wish listvc_tiffxyz_upscale_grounding- this tool separates the inpainting and upscaling step

3 x $1,000 (Papyrus)

Forrest McDonald uploaded Zarr datasets of scrolls 1-5 and fragments 1-6 that have been masked of air/void space and the scroll case, contrast enhanced, denoised, normalized, and quantized in order to reduce file size, memory size, and preprocessing time. An optimized Scroll 1A requires ~100GB which includes downsized projections for ~20x on disk space saving.

Will Stevens continued to iterate on his flattening and interpolation work using particle simulations. Be sure to read the report included on the repository detailing the current state and future improvements. Nice work!

Hendrik Schilling added functionality for the i3d first letters model to his ink detection repository , and provided some additional bug fixes and documentation to VC3D.

February in review

Community

Our community continues to work hard at solving segmentation. Discord user ‘@hja’ has shared some early work creating segmentations with fibers.

We’ve also continued to host our office hours 2-3x weekly. It’s been a pleasure to be able to speak with more members of our community and the office-hours provide an easy way to find help or just chat scrolls. If you have any interest in our challenge, check out the calendar and join in! We’d love to hear from you.

Fiber Annotation

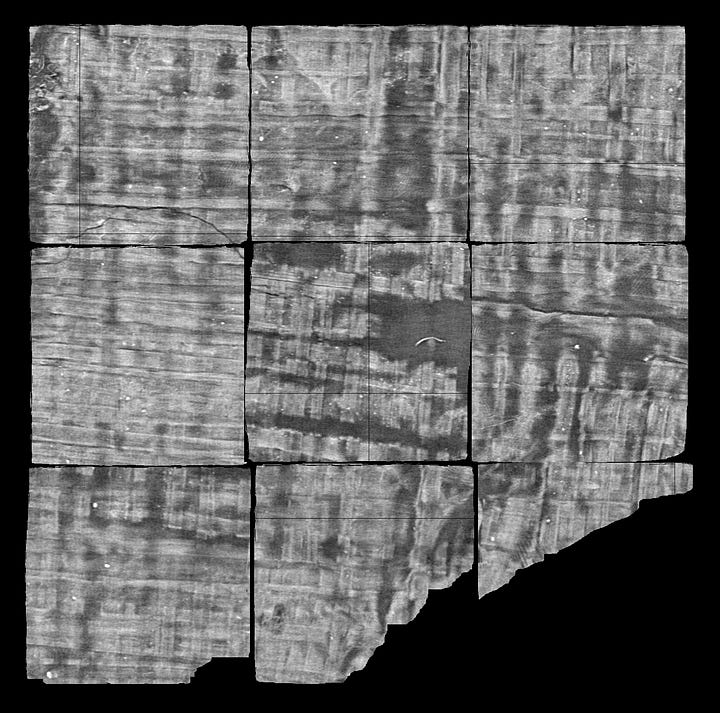

In February, we released Dataset002, an updated fiber annotation dataset building on Dataset001 with seven newly annotated cubes. The fiber datasets are formatted for direct integration into U-Net training. New for Dataset002 is additional label classifications for horizontal fibers, vertical fibers, and intersections, as assigned using this script.

Highlights from Dataset002 include two key 512 cubes: a folded spiral at the center of Scroll 5 and a blurry, compressed, torn region in Scroll 1a. Additionally, five 256 cubes from Scroll 5, spanning various difficulty levels, were included. Looking ahead to March, our focus will shift toward annotating fibers in Scrolls 3 and 5.

We trained a model on this new dataset, and uploaded the inference results for Scroll 1 and Scroll 5. The model performs quite well, but still needs some more work in compressed regions. Annotation continues here and we are optimistic that soon we’ll have a model that produces quality predictions even in the most difficult regions.

We’re focusing on fiber segmentations because we have found that we (humans) are able to annotate them even in more compressed regions where other labeling is difficult or impossible. By training on these labels, we want models to be able to output some structure in these regions that can be used for the downstream assembly stages of segmentation.

Take a look at the predictions on neuroglancer!

Scanning

Significant effort has been put into streamlining our scanning pipeline. Previously when preparing to scan a scroll, designing a transport and scan case for a single scroll took around 20 completely manual steps, each potentially prone to error and including some subjective choices about different parameters.

Thanks to incredible work by Alex Koen and our very own Giorgio Angelotti, we now have an entirely automated process to take photogrammetric scans of scrolls and turn them into scroll cases. Huge thanks to both of these guys for their contributions in this necessary step!

Following this redesign of the scroll case generation, we ordered the scroll cases for an upcoming scan session later this month at Diamond Light Source and began the process of fixturing the scrolls within their scan cases. We are thrilled with the results. Our cases produced from this pipeline have fit the scrolls perfectly.

Later this month, we plan to scan the most scrolls ever scanned in a single session by a wide margin! As a preview, here’s a look at some scroll models and cases, automatically generated by our improved pipeline.

Following the scan session, we will share the resulting scans with the community as quickly as possible! We’ve been working on streamlining and automating the reconstruction steps to enable this.

We’re very excited about this work, and it would be impossible without the close collaboration of our partners at the Biblioteca Nazionale Vittorio Emanuele III, the University of Naples Federico II, and EduceLab at the University of Kentucky. Thank you all!

Representation

Giorgio continued to push us forward on representations by training a single model on the following:

recto surface

verso surface

horizontal fibers

vertical fibers

possible intersection (high proximity) of any of the previous classes

We ran inference on Scroll 1, and uploaded the data to the data server here.

The model performs quite well, and we’re hoping to continue to improve our multi-class or multi-task models, so that rather than running a bunch of inference jobs with different models, we can run them once. Models that have to learn multiple tasks or classes also can sometimes outperform ones that do not, as the encoder is forced to learn features that differentiate them.

Ink Detection

We’ve continued to pursue highly accurate 2D labeling methods with the plan of taking these 2D predictions ran on multiple layers to produce highly localized 3D labels. The 2D labels are created using crackle-viewer and flood filling the crackle features on the surface. These labels differ from our normal labels in that they are specific to only features that we can verify exist on the papyrus surface.

Traditionally, we have labeled by drawing out the full letter as we believe it exists, in hopes of catching some unseeable feature on the surface that a model could pick up. This has worked very well, but leaves us unable to truly localize the letter in 3d, making 3d training somewhat difficult. We hope to be able to run inference on 2d layers and then turn these into true 3d labels.

Early training results have been quite promising! The 2D model outputs, run on individual layers and projected into a single one capture a significant amount of ink compared with the GP model from 2023, and even provide some signal where the GP model misses. Of course, the GP model still outperforms it overall, but this is a promising early sign that the idea should work, even with relatively few labels.

Youssef Nader also fine-tuned DINOv2 on these labels, and produced a very nice image of the ink on Scroll 1 segment 20231210121321.

The training dataset is available here and the current trained model is available here.

Hiring

We are very excited to announce that Johannes Rudolph has joined our team as a platform engineer! Johannes brings a much needed eye for detail and standardization, and a set of skills perfectly suited to helping us improve our ability to process, host, and serve our data throughout the pipeline from scanning to ink. Welcome Johannes!

We’re still hiring for the following roles:

If you or someone you know would be a good fit for these, please reach out!