It has never been easier to look inside ancient scrolls

Also: more prizes awarded!

We think we work with one of the coolest datasets out there. Insanely high resolution micro-CT scans of ancient scrolls? Every time you look at a new section of data, you are the first person to see this piece of scroll in 2,000 years.

It’s amazing. But it hasn’t been super easy to jump in. You have to read about the data structure, navigate the data server, download some files (maybe buy a hard drive), etc. What if you just want to take a look, like, right now?

Well, now you can:

Read on to learn more, and don’t miss the autosegmentation update and Progress Prize awards!

Ancient scrolls in the browser

We’re now hosting some versions of the scroll volumes that are optimized for visualization and partial access. These volumes are stored in 3D chunks, so you only have to request the bits you need, plus they’re multiresolu - oh, you get the idea. Don’t you want to see one?
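To make "you only have to request the bits you need" a bit more concrete, here is a minimal Python sketch of how a client could work out which chunks a region touches. The 128-voxel chunk size and the 4000-voxel cube are made-up numbers for illustration, not the actual layout of the hosted volumes, and `chunks_for_region` is a hypothetical helper rather than part of any released tool.

```python
from itertools import product


def chunks_for_region(start, stop, chunk_shape):
    """Return the grid indices of every chunk that overlaps a 3D region.

    start and stop are (z, y, x) voxel coordinates, with stop exclusive.
    chunk_shape is the (z, y, x) size of one chunk in voxels.
    """
    ranges = []
    for lo, hi, size in zip(start, stop, chunk_shape):
        if hi <= lo:
            return []  # an empty region touches no chunks
        first = lo // size        # chunk holding the first voxel
        last = (hi - 1) // size   # chunk holding the last voxel
        ranges.append(range(first, last + 1))
    return list(product(*ranges))


# Assumed numbers: one full 2D slice of a 4000^3 volume stored in 128^3 chunks.
needed = chunks_for_region((1000, 0, 0), (1001, 4000, 4000), (128, 128, 128))
total = (-(-4000 // 128)) ** 3  # ceil(4000 / 128) chunks per axis, cubed
print(len(needed), "of", total, "chunks")
```

Under these assumptions, a full slice touches only a single layer of chunks rather than the whole volume, and a zoomed-in view touches fewer still.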

Check it out: View the inside of PHerc. Paris. 4 (Scroll 1)

This uses Neuroglancer, a visualization and segmentation tool for connectomics, to easily browse through the scroll interior. You can pan by clicking and dragging, and can zoom by scrolling. It’s even possible to rotate the slice plane to arbitrary views with Shift + drag.

One nice bonus: if you navigate to something interesting, and then share the URL with others, they will be able to see what you were seeing! In addition to multiple scroll volumes, there are some 3D ink predictions and surface volumes available in this way, too. Check out some items of interest: Scroll 2, Scroll 3, Scroll 4, Scroll 1 flat papyrus, Scroll 1 crackle, Scroll 1 3D ink predictions, and a Scroll 1 segment.
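URL sharing works because Neuroglancer keeps its view state as JSON in the part of the URL after `#!`. The sketch below round-trips such a state using only the Python standard library. The viewer address, data source, and coordinates are placeholders rather than real Vesuvius Challenge links, and `encode_state` and `decode_state` are hypothetical helper names.

```python
import json
from urllib.parse import quote, unquote


def encode_state(base_url, state):
    """Append a JSON view state to a viewer URL as a '#!' fragment."""
    fragment = quote(json.dumps(state, separators=(",", ":")), safe="")
    return f"{base_url}#!{fragment}"


def decode_state(url):
    """Recover the JSON view state from a URL built by encode_state."""
    _, sep, fragment = url.partition("#!")
    if not sep:
        raise ValueError("URL has no '#!' state fragment")
    return json.loads(unquote(fragment))


# Placeholder state: the coordinate order depends on the dataset's dimensions.
state = {
    "position": [2048.5, 3100.5, 1000.5],
    "crossSectionScale": 4,
    "layers": [
        {
            "type": "image",
            "source": "zarr://https://example.org/scroll.zarr",  # placeholder
            "name": "scroll",
        }
    ],
}

url = encode_state("https://viewer.example.org/", state)
print(url)
print(decode_state(url)["position"])
```

Decoding a link someone shared with you is a quick way to pull out the exact position they were looking at.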

Happy browsing, and share your findings with us!

Ancient scrolls in Python (or C)

We’re also releasing vesuvius, a Python library to make it easier than ever to interact with scroll data programmatically. It just takes a line or two:

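import matplotlib.pyplot as plt
import vesuvius

# Open the Scroll 1 volume
scroll = vesuvius.Volume("Scroll1")

# Take the 2D slice at index 1000 along the first axis
img = scroll[1000, :, :]

# Display the slice
plt.imshow(img)
plt.show()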

To get started, we recommend checking out the repository, along with these introductory notebooks:

📊 Scroll Data Access: an introduction to accessing scroll data using a few lines of Python!

✒️ Ink Detection: load and visualize segments with ink labels, and train models to detect ink in CT.

🧩 Volumetric instance segmentation cubes: how to access instance-annotated cubes with the Cube class, used for volumetric segmentation approaches.

Thank you, Giorgio (@Jordi)!

There’s also the vesuvius-c library, if C is your cup of tea.

Let us know what you think. We accept pull requests!

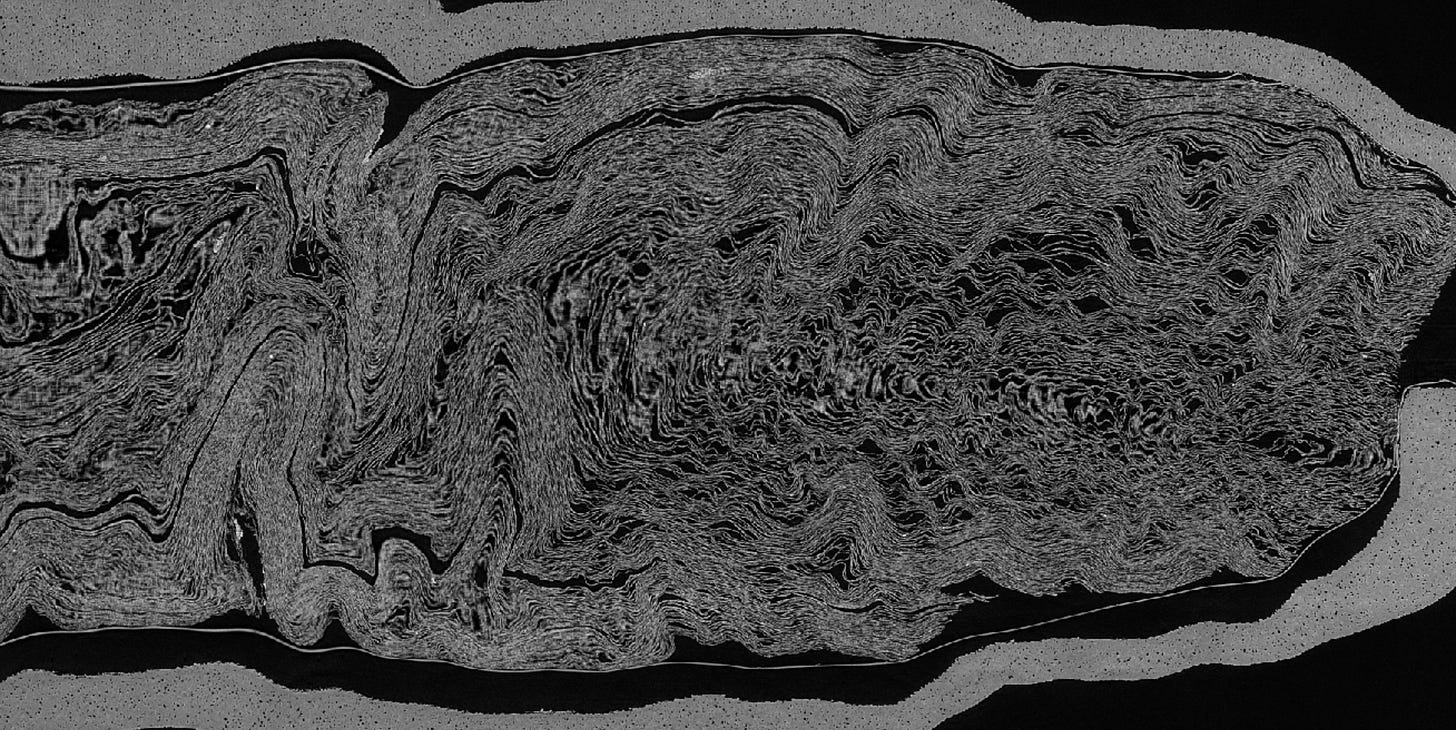

Autosegmentation update + help us solve it!

Our own Julian Schilliger (@RICHI) and team have been busy developing algorithms for autosegmentation. Check out the latest from Scroll 1! This shows an unrolling of most of the scroll, and a lot of ink can be seen throughout. The segmentation is not perfect yet and jumps between sheets, so a lot gets mixed together in this view of the resulting text. More work to be done! But very exciting! More info here.

We need your help to push this across the finish line! If you are interested in contributing to autosegmentation (AKA recovering entire ancient scrolls from the ashes), there are additional new resources to help introduce you to the current frontier. Please check out the Sheet Stitching Problem Playground and associated problem statement!

July Progress Prizes!

As always, the Vesuvius Challenge community is hard at work across various projects that are helping us recover the lost library of the Herculaneum scrolls. This month we are awarding the following prizes for this work!

4 x $2,500 (Sestertius)

James Darby (@james darby) added yet more features to the custom Napari extension for creating volumetric instance segmentation labels! Our segmentation team is now using this to create more annotated cubes for instance segmentation approaches. It is also now possible to load, inspect, and clean up 3D ink labels! More information here.

Jared Landau (@tetradrachm) created Vesuvius GP+, an extended implementation of the 2023 Grand Prize-winning ink detection approach that is designed to simplify the new user experience for those looking to get started with ink detection. In addition to the repository documentation, an additional report guides the reader through some experiments and their outputs. We are excited to see work to make ink detection more accessible!

Jorge García Carrasco (@jgcarrasco) created a notebook showing that DINO image features, not trained on any scroll-specific data, can detect some ink! Generating DINO features for scroll segments and viewing their leading principal components (from PCA) directly reveals the “crackle” pattern that often corresponds to ink in Scroll 1.

Forrest McDonald (@verditelabs) has a number of feature updates for Hraun, a set of Python tools for handling volumetric scroll data. Check out the updates here, which include volume rendering, 3D ink hunting, guided 3D segmentation, Zarr support, and more!
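For readers curious about the PCA step in the DINO notebook mentioned above, here is a rough sketch of the idea: take one feature vector per image patch and project each onto the direction of greatest variance. This is not Jorge's code. The features below are synthetic stand-ins, `first_principal_component` is a hypothetical helper, and power iteration is used only to keep the example dependency-free (in practice you would likely reach for NumPy or scikit-learn).

```python
import math


def first_principal_component(rows, iterations=100):
    """Estimate the top principal component of `rows` by power iteration.

    Returns the unit-length component and each row's score along it.
    """
    n, dim = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dim)]
    centered = [[r[j] - means[j] for j in range(dim)] for r in rows]
    # Sample covariance matrix (dim x dim).
    cov = [
        [sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(dim)]
        for a in range(dim)
    ]
    v = [1.0 / math.sqrt(dim)] * dim  # arbitrary unit-length starting vector
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(dim)) for a in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # no variance at all; keep the current vector
        v = [x / norm for x in w]
    scores = [sum(c[j] * v[j] for j in range(dim)) for c in centered]
    return v, scores


# Synthetic "patch features": most of the variance lies along (1, 2, 0).
features = [[t, 2.0 * t, 0.1 * (-1) ** i] for i, t in enumerate(range(-5, 6))]
component, scores = first_principal_component(features)
print([round(x, 3) for x in component])
```

Reshaping the per-patch scores back into the patch grid gives an image you can look at, which is where a pattern like crackle would show up if the features capture it.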

$1,000 (Papyrus)

Yao Hsiao (@Yao Hsiao) and Dalufishe (@Dalufish) created a web-based tool to view and annotate cubes of scroll data. Find more information on Discord!

Wrap up

Don’t forget to submit your projects this month for progress prizes too!

Let us know how you find the new tools - our goal is to make it easier and easier to get started with ancient scrolls!