State of segmentation and rendering

Also: new tutorials and resources!

The Vesuvius Challenge team, community, and scholars have kept busy lately! We are kicking off this week by taking a look at the current state of the art for segmentation, autosegmentation, and rendering. This one gets technical - if you have questions, come ask them in our Discord server or check out the tutorials and other resources on our website!

Segmentation

@Hari_Seldon reports on recent segmentation efforts:

On the manual segmentation side, the Segmentation Team is once again running into a wall with what can be manually segmented. This has happened three times before, and each time it was overcome: @Seth P. made it possible to segment at all with the monumental effort of creating Volume Cartographer (VC) from scratch; @RICHI improved the speed and accuracy of VC by several orders of magnitude; and @spacegaier added well-tailored functionality to allow us to penetrate semi-compressed papyrus regions in finite time. Huge kudos to our VC Heroes!

To overcome the current wall we require new tooling. This could look like improving VC, improving Khartes, or developing entirely new software. The biggest problems are: papyrus regions being off-axis to the slice orientation, the frequency of fraying/rippling regions throughout, and compressed regions where adjacent papyrus layers are tightly pressed together. We have detailed some ideas to tackle these on our wish list. Please reach out on our Discord server if you have the volition to help. There is fame and fortune to be won!

Let us look at our segmentation progress to date:

Scroll 1 - 1200 sq. cm (~5% of the scroll)

Scroll 2 - 50 sq. cm (~0%)

Scroll 3 - 2 sq. cm (~0%)

Scroll 4 - 700 sq. cm (~75%)

As you can see, there is much work ahead of us. We are approaching our limits on Scrolls 1, 2, and 4, with only minor sections left that can be manually segmented in a realistic time frame. We are now getting started on the wild data set that is Scroll 3. We expect to make slow but steady progress segmenting it, and our hope is that software development will forge ahead and open new doors as we go. Check out the new segmentation tutorial, where we explore Scroll 3 with Volume Cartographer.

There will also be manually annotated volumetric data sets emerging over the coming weeks, as these chunked data formats are useful for input into many autosegmentation algorithms, and volumetric labeling of papyrus is excellent for deep learning experiments. This is another area where software advancements would be a great help, as 3D annotation is a real challenge!

Autosegmentation

@RICHI breaks down the latest in autosegmentation:

Currently, we see some general structure emerging in the development of autosegmentation. To process the large 3D volume of the scroll scan, most approaches first split the volume into chunks, or subvolumes. Those subvolumes then get processed individually in one way or another. Approaches that are being tested include 3D volumetric instance segmentation of the papyrus sheets, and point cloud extraction of the same (either the sheet surface or the sheet center).
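To make the chunking step concrete, here is a minimal sketch of how a large volume could be split into subvolume bounds. The function name, the chunk size of 500, and the example volume shape are assumptions for illustration only; individual autosegmentation pipelines may chunk differently (for example with overlap between neighbors).

```python
from itertools import product

def chunk_bounds(shape, chunk=500):
    """Yield (z0, z1, y0, y1, x0, x1) bounds that tile a (z, y, x) volume.

    Chunks at the far edges are clipped to the volume shape.
    """
    starts = [range(0, n, chunk) for n in shape]
    for z, y, x in product(*starts):
        yield (z, min(z + chunk, shape[0]),
               y, min(y + chunk, shape[1]),
               x, min(x + chunk, shape[2]))

# Hypothetical volume shape (z, y, x); a real scroll scan is far larger.
bounds = list(chunk_bounds((1200, 1000, 700), chunk=500))
print(len(bounds))  # 3 * 2 * 2 = 12 subvolumes
```

Each tuple can then be used to slice one subvolume out of the scan and hand it to whichever local segmentation method is being tested.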

In the next step, those local segmentations need to be combined (or “stitched”), associating small segmented sheet patches with their neighbors from the same sheet. This is an active area that needs a lot of improvement. The goal is to find a solution without false positive stitches, which erroneously jump between different sheets of the scroll and make the text incoherent.

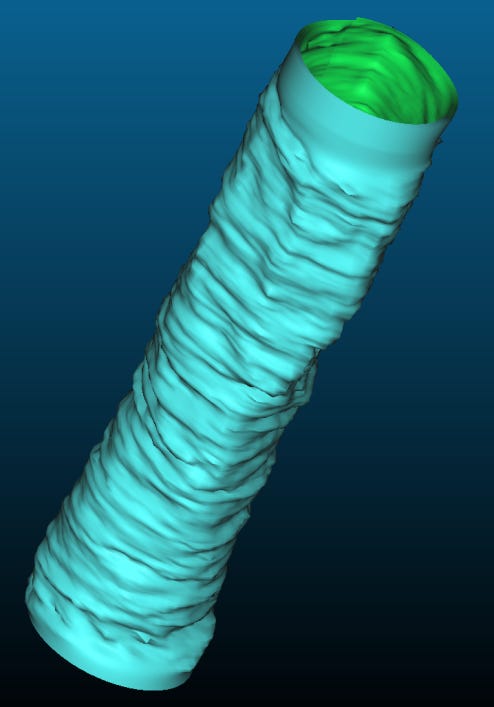
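One simple way to think about stitching is as a grouping problem: each patch is a node, each candidate stitch is an edge with some confidence score, and only confident edges are allowed to merge patches. The sketch below uses a union-find structure for this. The scores, the 0.9 threshold, and the function names are all hypothetical, and this is not a description of how any particular tool stitches.

```python
def find(parent, i):
    """Return the representative of i's group, compressing the path as we go."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def stitch(num_patches, candidates, min_score=0.9):
    """Merge patches joined by a candidate stitch scoring at least min_score.

    candidates: iterable of (patch_a, patch_b, score) tuples.
    Returns one group label per patch.
    """
    parent = list(range(num_patches))
    for a, b, score in candidates:
        if score >= min_score:  # reject low-confidence stitches
            ra, rb = find(parent, a), find(parent, b)
            if ra != rb:
                parent[rb] = ra
    return [find(parent, i) for i in range(num_patches)]

# Hypothetical candidates: the 2-3 stitch is doubtful and gets rejected.
candidates = [(0, 1, 0.97), (1, 2, 0.95), (2, 3, 0.40), (3, 4, 0.99)]
labels = stitch(5, candidates)
print(labels)  # patches 0-2 share one label, patches 3-4 share another
```

A strict threshold trades coverage for safety: a single false positive merges two different sheets for good, while a missed stitch only leaves a sheet in several pieces.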

In a final step, the stitched segmentation has to be translated into a mesh representing the complete surface. Poisson surface reconstruction is often used, but does not always produce manifold meshes (a prerequisite for some downstream steps). Another approach is to take advantage of the vertex coordinates during the meshing step. ThaumatoAnakalyptor recently got an update that does this, using the 3D vertex coordinate as well as its angle with respect to the scroll center (or “umbilicus”) to create meshes that are manifold and do not self-intersect.
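To illustrate why the angle around the umbilicus is useful, the sketch below computes an unwrapped ("winding") angle for points along a spiral. Two points that sit in the same direction from the center but on different wraps end up 360 degrees apart, which keeps neighboring wraps distinct. It assumes a single fixed 2D center and an ordered path of points; it is an illustration of the idea, not ThaumatoAnakalyptor's actual implementation.

```python
import math

def winding_angles(points, center):
    """Unwrapped angle in degrees around `center` for an ordered path of (x, y) points."""
    cx, cy = center
    angles = []
    prev = None
    total = 0.0
    for x, y in points:
        a = math.degrees(math.atan2(y - cy, x - cx))
        if prev is None:
            total = a
        else:
            # Wrap the step into [-180, 180) so crossing the +/-180 seam
            # does not produce a 360 degree jump.
            step = (a - prev + 180.0) % 360.0 - 180.0
            total += step
        prev = a
        angles.append(total)
    return angles

# Hypothetical spiral cross-section: radius grows slightly with each quarter turn.
spiral = [(1.0, 0.0), (0.0, 1.1), (-1.2, 0.0), (0.0, -1.3), (1.4, 0.0), (0.0, 1.5)]
print(winding_angles(spiral, center=(0.0, 0.0)))
```

The first and fifth points both lie on the positive x axis, but their winding angles differ by a full turn.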

Following segmentation, the mesh is unrolled ("flattened") and rendered. Fast rendering scripts have recently emerged and have been improved by multiple people (see below!). There has also been discussion lately of using different on-disk representations for the scrolls and the rendered surface volumes. Compression might dramatically reduce the disk space needed to work on the scrolls without losing the important information in the scan.

Rendering

@RICHI also shares some rendering scripts from himself and @Jordi:

First is mesh_to_surface.py, an alternative to vc_render for transforming segmented meshes into rendered “surface volumes” containing the volumetric data along that surface. This script leverages the GPU to accelerate the rendering computation, resulting in much faster renders.

Example usage:

python3 -m ThaumatoAnakalyptor.mesh_to_surface /home/Scroll1.volpkg/paths/20231005123336/20231005123336.obj /home/Scroll1.volpkg/volume_grids/20230205180739 --format jpg --display

--format can specify the output format for the rendered surface volume. Tested: (tif, jpg). Untested: (numpy, zarr).

--display enables real-time rendering display.

Note: this script uses @spelufo’s 500x500x500 grid cells as input.
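For readers new to the term, a "surface volume" is a stack of images sampled from the scan at small offsets above and below the segmented surface. The sketch below shows the idea on the CPU with NumPy and nearest-neighbor sampling. It is a toy under stated assumptions (a per-pixel grid of 3D points and unit normals in (z, y, x) order, and a hypothetical function name), not how mesh_to_surface.py is implemented; the real script runs on the GPU and reads grid cells from disk.

```python
import numpy as np

def sample_surface_volume(volume, points, normals, half_depth=2):
    """Sample `volume` (z, y, x) along each surface point's normal.

    points, normals: arrays of shape (H, W, 3) in (z, y, x) order.
    Returns an array of shape (2 * half_depth + 1, H, W).
    """
    offsets = np.arange(-half_depth, half_depth + 1)
    # Sample positions for every layer: shape (D, H, W, 3).
    pos = points[None] + offsets[:, None, None, None] * normals[None]
    idx = np.rint(pos).astype(int)  # nearest-neighbor lookup
    for axis in range(3):
        # Clamp indices so samples outside the scan reuse the border voxel.
        idx[..., axis] = np.clip(idx[..., axis], 0, volume.shape[axis] - 1)
    return volume[idx[..., 0], idx[..., 1], idx[..., 2]]

# Toy scan whose voxel value equals its z index.
volume = np.arange(8.0)[:, None, None] * np.ones((8, 4, 4))

# Flat hypothetical surface at z = 4 with normals pointing along +z.
yy, xx = np.meshgrid(np.arange(4.0), np.arange(4.0), indexing="ij")
points = np.stack([np.full((4, 4), 4.0), yy, xx], axis=-1)
normals = np.zeros((4, 4, 3))
normals[..., 0] = 1.0

layers = sample_surface_volume(volume, points, normals, half_depth=2)
print(layers.shape)     # (5, 4, 4)
print(layers[:, 0, 0])  # values from z = 2 through z = 6
```

A production renderer would typically interpolate between voxels rather than round to the nearest one, and would load only the grid cells the mesh actually passes through.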

There’s also large_mesh_to_surface.py, which performs the same rendering process but first breaks down the mesh into smaller pieces so that the output images have more reasonable file sizes (< 4 GB) for large meshes.

Example:

python3 -m ThaumatoAnakalyptor.large_mesh_to_surface --input_mesh /home/Scroll1.volpkg/paths/20231005123336/20231005123336.obj --grid_cell /home/Scroll1.volpkg/volume_grids/20230205180739 --format jpg --display

Happy June - let’s read some scrolls!!